Is Grok 3 the future of AI, or just another overhyped Musk project?

Introduction: What Is Grok 3?

The universe is a strange place. 13.8 billion years of chaos, and here we are—asking questions that even Google answers with ‘Try rephrasing.’ But now, there’s Grok 3.

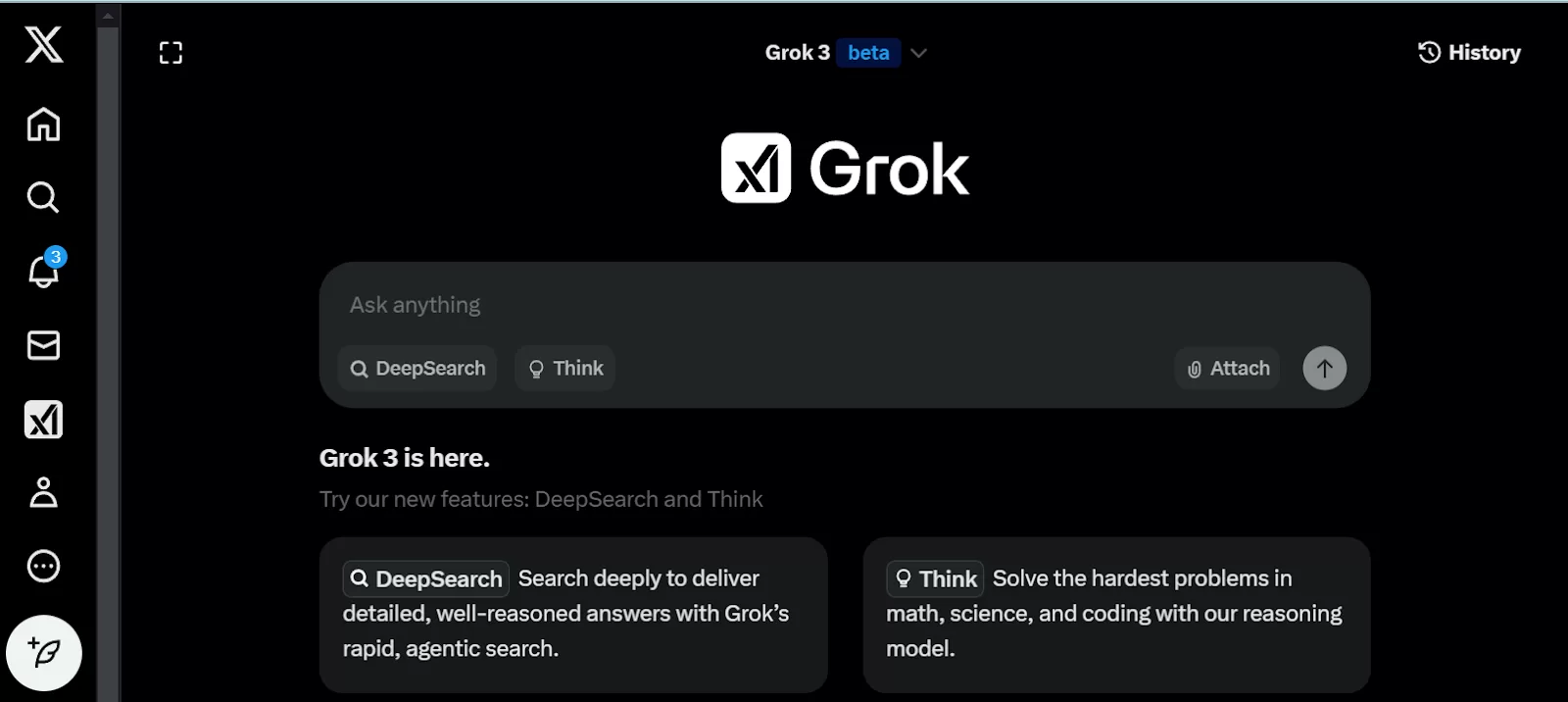

Enter Grok 3, the latest AI marvel from Elon Musk’s xAI, promising to be a less censored, more logical alternative to popular models like ChatGPT, Claude, and Gemini. According to Musk and xAI engineers, Grok 3 is 10 times more powerful than its predecessor, Grok 2. Powered by cutting-edge hardware, synthetic data, reinforcement learning, and human feedback, it boasts unique features like DeepSearch and context memory. But is it the AI game-changer it claims to be, or just another Musk-driven hype machine?

In this article, we’ll dissect Grok 3’s features, weigh its pros and cons, explore its place in Musk’s expansive ecosystem, and investigate whether it’s a true innovation or simply overpromised tech. Plus, we’ll dig into the data privacy concerns and what this means for you. Let’s dive in.

What Is Grok 3? The AI That Thinks Deeper

Grok 3 isn’t your run-of-the-mill chatbot. Built by xAI, it’s designed to think—not just generate text. At its core is a Mixture of Experts (MoE) architecture, which uses specialized submodels (or “experts”) to handle different tasks. Whether it’s solving a math problem or analyzing real-time news, Grok 3 dynamically selects the right experts for the job. This makes its responses feel more reasoned and precise, setting it apart from traditional language models that rely on general patterns.

What Is Grok 3 and How Does It Work?

Grok 3 is the latest iteration of Musk’s AI chatbot, developed by xAI. The model leverages Mixture of Experts (MoE) architecture, meaning it divides tasks among specialized AI subsystems rather than processing everything in a single neural network. This allows it to optimize performance for different types of queries, improving efficiency and accuracy.

Key Features of Grok 3:

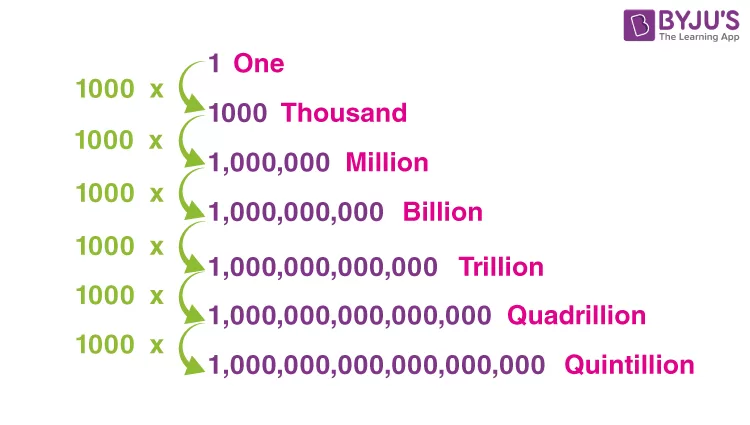

- Very large scale: xAI published a figure of 314 billion parameters for Grok-1 and has not disclosed a parameter count for Grok 3.

- DeepSearch technology—a feature that enables real-time search and fact verification.

- Optimized for logic and problem-solving, aiming to outperform competitors in science, coding, and reasoning.

- Uses real-time X (Twitter) data—unlike ChatGPT, which relies on static datasets.

- Open-source lineage: Grok-1 was released under the Apache 2.0 license, which gives developers some transparency; xAI has not confirmed the same for Grok 3.

How Is Grok 3 Better Than ChatGPT and DeepSeek?

| Feature | Grok 3 | ChatGPT (GPT-4) | DeepSeek |
|---|---|---|---|
| Model size (parameters) | 314 billion (figure published for Grok-1) | ~1.76 trillion (unconfirmed estimate) | ~100-200 billion (estimate) |
| Data Access | Real-time X data | Static web datasets | Static datasets |
| DeepSearch | Yes | No | No |
| Transparency | Open-source | Closed-source | Closed-source |
| Logic & Math Skills | Optimized | Standard | Competitive, specifics unknown |

As of February 26, 2025, Grok-3 remains significantly weaker than OpenAI’s GPT models in overall intelligence, versatility, and raw processing power. OpenAI still leads the industry, with GPT maintaining a clear advantage in both scale and performance. However, Grok-3’s potential is undeniable. Unlike its competitors, it offers real-time data access via X, an open-source framework, and API integration for businesses—features that, with further development, could open massive opportunities in AI-driven automation and data analysis. While its DeepSearch function enhances web summarization, and its logic/math optimizations show promise, Grok-3 is not yet a true rival to GPT. In my other research, I examined Musk’s broader strategy, which suggests that Grok-3 is just the beginning of a larger play in AI dominance. For now, OpenAI remains ahead, but Grok-3’s unique features and open-source nature make it a model worth watching.

Grok-3 vs. OpenAI: Who’s the Better AI, and Is It Worth the Subscription?

After testing both models, it’s clear that Grok-3 is no match for OpenAI’s GPT models, especially in deep research and content analysis. While Grok-3 processes information faster, its depth and quality fall significantly short compared to OpenAI. For instance, Grok-3 completes a deep search in about one minute, reviewing 60 links and generating 2-3 pages of text. Meanwhile, OpenAI takes 20 minutes, analyzes 20 links, but delivers 20 pages of high-quality analysis—a significant difference in thoroughness and insight.
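To put the speed-versus-depth trade-off in numbers, here is a rough back-of-the-envelope sketch in Python. It uses only the figures from the test above; the 2.5-page value is simply the midpoint of "2-3 pages", and the two ratios are my own illustrative metrics, not an established benchmark.

```python
# Figures from the test described above; 2.5 is the midpoint of "2-3 pages".
runs = {
    "Grok-3 DeepSearch": {"minutes": 1, "links": 60, "pages": 2.5},
    "OpenAI deep research": {"minutes": 20, "links": 20, "pages": 20},
}


def summarize(run):
    """Return links read per minute and pages written per link."""
    return {
        "links_per_minute": run["links"] / run["minutes"],
        "pages_per_link": run["pages"] / run["links"],
    }


stats = {name: summarize(run) for name, run in runs.items()}

for name, s in stats.items():
    print(f"{name}: {s['links_per_minute']:.1f} links/min, "
          f"{s['pages_per_link']:.2f} pages per link")
```

On these numbers Grok-3 skims links roughly 60 times faster, while OpenAI writes far more text per source it reads, which matches the impression of speed on one side and depth on the other.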

That said, Grok-3 has its strengths, particularly in real-time data access via X, making it useful for tracking trending topics, summarizing breaking news, and processing fresh online data. Unlike OpenAI, it is open-source, offers API integration for businesses, and has features that, with further development, could unlock massive opportunities in automation, AI-driven research, and business applications.

Should You Buy a Grok-3 Subscription?

It depends on your needs. If you’re looking for in-depth analysis, high-quality research, and complex reasoning, OpenAI is still the better and more powerful AI in 2025. However, if you want real-time social media analysis, open-source flexibility, and a model that will evolve rapidly, Grok-3 may be worth considering—especially for those who want to experiment with its API.

Musk’s vision with xAI is long-term, and while Grok-3 isn’t competing head-to-head with OpenAI yet, its unique approach and open-source model mean it could evolve into something truly groundbreaking in the future. For now, though, OpenAI remains the dominant force in AI.

If you already have an OpenAI subscription, it’s probably the better investment for now, especially since their deep research feature offers 10 advanced searches per month—something Grok-3 currently cannot compete with.

Grok-3: The Downsides You Need to Know

While Grok-3 introduces innovative features like real-time data access, an open-source model, and API integration for businesses, it still significantly lags behind OpenAI’s GPT models in 2025. Here are the key disadvantages and limitations that users should consider before switching to Grok-3.

Limitations for Free Users

- “Think” Mode Restrictions – You can only use the advanced reasoning mode twice every 24 hours.

- “DeepSearch” Limitations – Grok-3 allows only 2 DeepSearch requests per day, making it unsuitable for heavy research tasks.

- 24-Hour Reset – Once you hit these limits, you must wait until the next 24-hour cycle to regain access.

Grok-3 Cons

- Does not save prompts or results after a glitch – If the session crashes, all previous queries and responses are lost, unlike OpenAI, which retains history.

- Provides false information – Users report that Grok-3 often generates misleading or entirely fabricated responses, making it unreliable for fact-based work.

- Suggests broken or non-existent links – Many links provided in DeepSearch mode either do not exist or are outdated, making fact-checking frustrating.

- Fails to understand requests – Struggles with contextual accuracy, sometimes giving vague or completely off-topic answers.

- Confuses tasks within one dialogue – In longer conversations, Grok-3 mixes up previous prompts, making follow-ups difficult.

- Crashes and forgets questions – Unlike GPT, Grok-3 often freezes and does not save previous conversations, forcing users to start over.

Privacy Concerns & User Complaints

- Lack of intelligence in responses – Many online reviews suggest Grok-3 is not “smart enough”, with too many made-up answers, reducing its usefulness for serious tasks.

- Potential X Profile Analysis – Some speculate that Grok-3 can analyze X (formerly Twitter) user profiles and posts, raising concerns about data privacy.

- Unreliable DeepSearch Mode – Some users claim Grok-3 fabricates facts or references non-existent sources, especially when using DeepSearch, making it untrustworthy for critical research.

Why Grok can “think” and not just substitute semantically similar words

Although Grok-3 is not good enough as of 2025, it has huge potential. Elon Musk has built a solid foundation: broad functionality and expensive hardware for training. In this section, let’s review the main features. If you would like to dig deeper into the open code and the technical characteristics of the model, you are welcome to read my article.

How Grok 3 Learned

Synthetic Data: Uses AI-generated data sets that mimic the real world, much as pilots train in simulators before flying.

Self-Correction Mechanisms: Grok double-checks its answers, reducing errors and illogical statements.

Reinforcement Learning: Uses a reward system for correct answers, similar to how AI learns to play chess or Go.

Human Feedback: Real people evaluate and correct the AI’s answers to improve accuracy.

Contextual Learning: Grok remembers previous chat messages and analyzes important parts of the dialogue to make more meaningful responses.

Grok, developed by xAI, is positioned as a language model that stands out among other large language models (LLMs) due to its ability to do deeper and more meaningful analysis, rather than simply generating text based on statistical patterns. But what exactly is it that allows Grok to “think” in this sense? Let’s break down the key features.

Mixture of Experts (MoE) – the core of Grok’s “thinking”

The key feature of Grok that sets it apart from other LLMs is its Mixture of Experts (MoE) architecture. This is not just a single neural network, but a system consisting of many specialized submodels, or “experts.” Each of these experts is trained to work on certain types of problems or areas of knowledge. When Grok receives a request, it dynamically selects the most appropriate experts to handle it.

How does it work? Instead of running every request through the whole network, a gating component routes it to the experts best suited to handle it. For example, a request that requires mathematical calculations would activate the “math experts”, while news analysis would activate the “news experts”. This picture is a simplification: in MoE models the routing is learned during training and usually happens token by token, so the experts do not map neatly onto human subject areas.

Why is this important? This approach allows Grok to go beyond just “filling in words” and apply specialized knowledge, making its answers more accurate and contextually meaningful. This creates the impression of deeper “thinking” as the model can combine different approaches and take into account the specifics of the task.
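The routing idea can be sketched in a few lines of Python. This is a generic top-k gating toy, not xAI's code: the number of experts, the vector size, the random gate weights, and the "experts" themselves (simple scaling functions) are all hypothetical stand-ins. In a real model the gate and the experts are neural network layers whose weights are learned.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(token, gate_weights, top_k=2):
    """Pick the top_k experts for one token and renormalize their weights."""
    # One gate score per expert: dot product of the token with that expert's gate vector.
    scores = [sum(t * w for t, w in zip(token, row)) for row in gate_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_layer(token, gate_weights, experts, top_k=2):
    """Weighted sum of the outputs of the selected experts only."""
    weights = route(token, gate_weights, top_k)
    out = [0.0] * len(token)
    for i, w in weights.items():
        expert_out = experts[i](token)
        out = [o + w * e for o, e in zip(out, expert_out)]
    return out, weights


# Toy setup: 4 hypothetical experts, each just scales the input differently.
random.seed(0)
dim, n_experts = 3, 4
gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
experts = [lambda x, k=k: [k * v for v in x] for k in range(1, n_experts + 1)]

output, used = moe_layer([0.5, -1.0, 2.0], gate_weights, experts)
print("experts used:", sorted(used), "weights:", used)
```

Only two of the four experts run for this input, which is the point of the design: the model can hold many parameters in total while spending compute on just a few experts per token.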

Additional factors: truthfulness and relevance

In addition to MoE, Grok is designed with truthfulness and relevance in mind. It is trained and tuned to minimize the “hallucinations” typical of LLMs – cases where the model produces plausible but false statements. This reinforces the perception of Grok as a model that strives for accuracy and depth, rather than just linguistic fluency.

In addition, Grok has direct access to the X platform (formerly Twitter), allowing it to take into account relevant information in real time. This adds flexibility and contextual relevance to its answers, distinguishing it from models that rely only on static data.

Limitations: “Thinking” as a metaphor

Despite these advanced features, it is important to note that Grok does not think the way a human does. Like other LLMs, it is driven by computation and data, not consciousness. While its MoE architecture and focus on truthfulness improve its answers, it can still make mistakes and generate inaccuracies.

Bottom Line

The key feature that lets Grok stand out and create the impression of “thinking” is its Mixture of Experts (MoE) architecture. It allows the model to apply specialized knowledge and analyze problems more deeply and accurately, rather than simply collecting and substituting words like many other LLMs. Its focus on truthfulness and its access to up-to-date data enhance this effect, although Grok remains a simulation of thinking, not a true embodiment of it.

How Much Funding Has Been Raised?

xAI Total Funding:

As of June 2024, xAI had raised $6 billion in funding at a $24 billion valuation (source: TechCrunch, June 2024).

In February 2025, Bloomberg reported that xAI was in talks for a new $10 billion round, which would raise the valuation to $75 billion (source: Bloomberg, February 17, 2025).

Infrastructure:

xAI has invested heavily in the Colossus supercomputer in Memphis. In 2024, it used 100,000 Nvidia H100 GPUs, and for Grok-3, that number was doubled to 200,000 (source: CNBC, February 18, 2025). The cost of such a cluster is estimated at $4-5 billion (the price of H100 is ~$40,000 per unit).
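As a quick sanity check on these figures, here is a small Python sketch that multiplies the GPU counts by the ~$40,000 unit price quoted above. It covers the GPUs alone; networking, power, buildings, and any volume discount are ignored, which is my simplifying assumption.

```python
H100_UNIT_PRICE = 40_000  # USD, the approximate per-unit price quoted above


def gpu_bill(gpu_count, unit_price=H100_UNIT_PRICE):
    """Cost of the GPUs alone, in billions of USD."""
    return gpu_count * unit_price / 1e9


for count in (100_000, 200_000):
    print(f"{count:,} GPUs -> ${gpu_bill(count):.1f} billion")
```

At that unit price, 100,000 GPUs come to about $4 billion and 200,000 to about $8 billion. The $4-5 billion estimate therefore lines up with the first phase of the cluster, or with the full cluster only if xAI paid well below the quoted price.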

Comparison of computing power

The table below compares the estimated computing power and cost of the supercomputers behind Grok and other projects:

| Project | Supercomputer | Power (FLOPS) | Cost | Source |
|---|---|---|---|---|
| xAI (Grok-3) | Colossus | ~200 PFLOPS (estimate) | ~$4–5 billion | CNBC, February 18, 2025 |
| OpenAI (GPT-4) | Azure Cluster | ~300 PFLOPS (estimate) | ~$2–3 billion (estimate) | Forbes, 2023 (estimate) |
| Google (Gemini) | TPU v5 Cluster | ~500 PFLOPS (estimate) | ~$3–4 billion (estimate) | Google Cloud Blog, 2024 |
| DeepSeek (V3) | Custom Cluster | ~150 PFLOPS (estimate) | ~$1 billion (estimate) | DeepSeek Paper, January 2025 |

Key Advantages of Colossus (Grok-3’s Supercomputer)

- Raw Compute Power – 200,000 Nvidia H100 GPUs provide massive parallel processing, making it a cutting-edge AI training infrastructure.

- Full Control & Independence – Unlike OpenAI (Azure Cloud) or Google (TPU chips), xAI owns its entire AI infrastructure, avoiding reliance on external providers.

- Future-Proof Scaling – Built to handle more advanced AI models, possibly for Tesla’s self-driving AI, Neuralink, or Mars simulations.

- Real-Time Data Processing – Optimized for DeepSearch and real-time AI, leveraging X (Twitter) data in a way competitors can’t.

- Potential for Multi-Domain AI – Could go beyond chatbots, supporting AI in robotics, automation, and other Musk ventures.

💡 Biggest downside: Most expensive per FLOP, still weaker than Google’s TPU v5 in raw power. 🚀
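The "most expensive per FLOP" point follows directly from the estimates in the comparison table. A rough Python sketch, assuming the midpoint of each cost range is a fair single number to use (my simplification) and keeping in mind that every input is itself an estimate:

```python
# (low, high) cost in billions of USD and estimated PFLOPS, taken from the table above.
clusters = {
    "xAI Colossus": {"cost": (4, 5), "pflops": 200},
    "OpenAI Azure cluster": {"cost": (2, 3), "pflops": 300},
    "Google TPU v5 cluster": {"cost": (3, 4), "pflops": 500},
    "DeepSeek custom cluster": {"cost": (1, 1), "pflops": 150},
}


def cost_per_pflops(cluster):
    """Midpoint cost divided by estimated PFLOPS, in millions of USD."""
    low, high = cluster["cost"]
    midpoint_billions = (low + high) / 2
    return midpoint_billions * 1000 / cluster["pflops"]


# Most expensive per PFLOPS first.
ranking = sorted(clusters, key=lambda name: cost_per_pflops(clusters[name]), reverse=True)
for name in ranking:
    print(f"{name}: ~${cost_per_pflops(clusters[name]):.1f}M per PFLOPS")
```

On these estimates Colossus costs roughly $22.5 million per PFLOPS, around three times the figure for the other clusters in the table.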

X Revenue:

Part of the funding comes from X Premium+ subscriptions, which increased to $30/month after the launch of Grok-3 (source: TechCrunch, February 19, 2025).

Grok Cost Estimate: No direct data, but given Colossus and development costs, Grok-3 likely accounted for $2-3 billion of xAI’s total budget.

Another Hype or a Game-Changer?

Arguments for Hype:

- Model release deadlines are often delayed: Grok-3 was initially planned for late 2024 but was only released in February 2025 (source: TechCrunch, January 2, 2025).

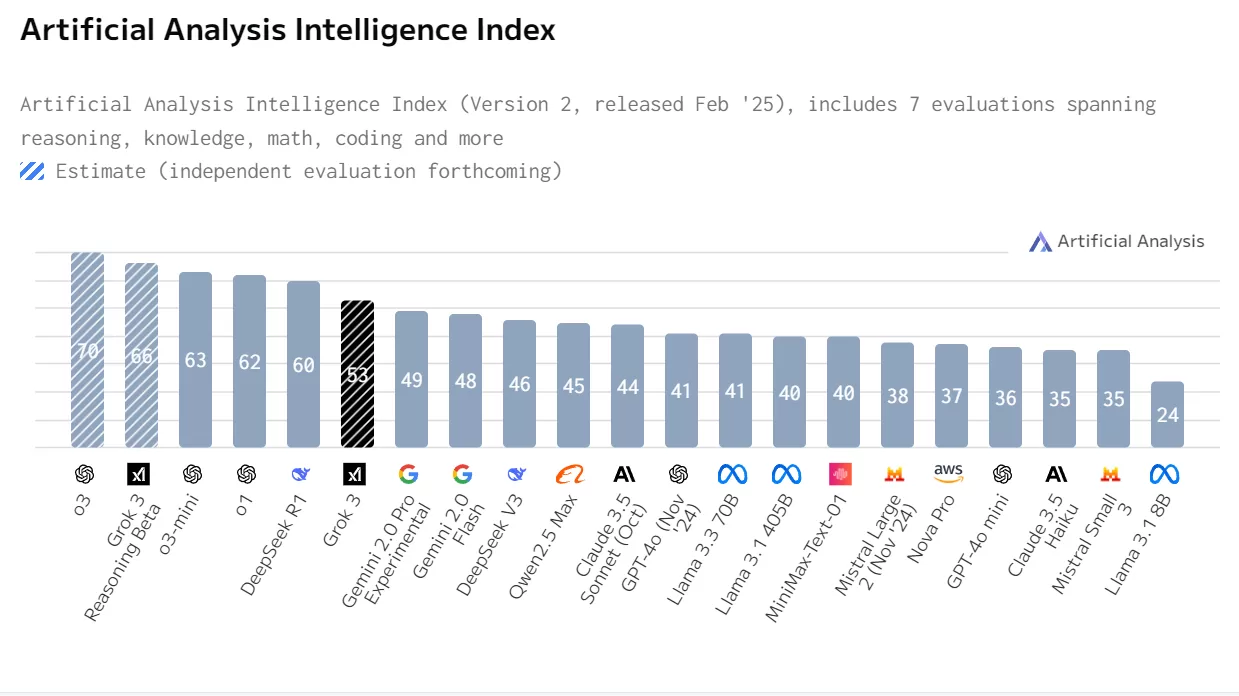

- Musk’s bold claims (“the smartest AI in the world”) are not always backed by independent tests. For example, Grok-3 falls behind OpenAI o3 in the Artificial Analysis Quality Index (source: NBC News, February 18, 2025).

- Ethics and Bias: Critics argue that Grok’s so-called “truth-seeking” nature may reflect Musk’s personal views rather than true objectivity (source: gettothetext.com, February 18, 2025).

Arguments for Reality:

- Rapid Progress: The transition from Grok-1 to Grok-3 in just two years is an impressive pace for AI development.

- Real-Time Data Integration: Unlike competitors, Grok has access to real-time data from X, giving it a unique advantage (source: Android Police, April 26, 2024).

- Benchmarks: xAI claims that Grok-3 outperforms GPT-4o in mathematics, science, and coding (source: CNBC, February 18, 2025). You can verify these results independently on lmarena: https://lmarena.ai/.

My Conclusion:

Unlike its competitors, Grok-3 is open-source, integrates with real-time X (Twitter) data, and offers an API for business automation, creating opportunities for future advancements. xAI’s Colossus supercomputer, while the most expensive per FLOP, ensures Musk’s full control over infrastructure, making it a long-term strategic investment.

Is X (Formerly Twitter) Providing Data for Grok?

The well-known tech publication TechCrunch has already published guidelines on how to protect your confidential data on X, a hint that data from X may indeed be used for AI development. When Musk first acquired Twitter, many speculated about the real reason behind the purchase. Now the bigger picture is starting to come together. Musk’s growing business empire spans multiple cutting-edge industries: space exploration, cryptocurrency, AI, electric vehicles, and alternative energy.

Musk has not disclosed the exact sources of training data beyond vague references. For instance, while it’s confirmed that X content is used in training, the nature of other web data, synthetic inputs, and proprietary datasets (such as Tesla data, as Bloomberg speculated in 2024) remains unclear. This lack of transparency raises concerns about potential bias and ethical issues, especially considering that Grok-1 was trained on web data up until Q3 2023 (xAI blog, 2024), while Grok-3’s dataset is likely much larger, yet undisclosed.

What Musk Says:

Musk has promised that Grok-2’s code will be open-sourced once Grok-3 is stable (CNN, February 18, 2025). He frames this as a commitment to transparency, particularly after criticizing OpenAI’s lack of openness.

What He Doesn’t Say:

- Musk hasn’t clarified what exactly will be open-sourced.

- Grok-1 was released under the Apache 2.0 license, but while it included the model weights, the training data was not disclosed (TechCrunch, March 18, 2024).

- The Open Source Initiative argues that this is not true open-source (Euronews, March 28, 2024), and Musk has not confirmed whether Grok-3 will be fully transparent.

Conclusion:

While Grok-3 represents a bold step forward for xAI, it remains overshadowed by OpenAI, which, as of February 2025, still leads in AI power and capabilities. Despite Musk’s improvements—making Grok-3 faster and addressing previous weaknesses—the model is not yet strong enough to rival the industry’s top players. However, its potential is enormous. With exclusive access to real-time X data, a rapidly evolving architecture, and Musk’s relentless drive for disruption, Grok-3 could become a formidable AI in the coming years. Whether it will truly challenge OpenAI or remain an underdog depends on xAI’s ability to refine its technology, expand its dataset, and prove its real-world value beyond the hype.
