With four years in the ever-changing landscape of digital marketing and content creation, I’ve seen trends come and go, strategies evolve, and algorithms shift. One question has consistently hovered over our SEO efforts: Is AI hurting our SEO?

Many clients and colleagues advocate for a human-centered approach, saying, “No AI.” Interestingly, even when we create content by hand, AI checkers sometimes suspect it’s AI-generated. The same dilemma occurs with plagiarism.

This article will explore the relationship between AI-generated content and SEO rankings. We’ll discuss how AI content creation impacts SEO, AI detection tools, and the importance of human intervention in content quality. We’ll also explore Google’s stance on AI content and its relevance to search ranking. If you are a marketing specialist, note that we have also discussed the best AI image generators. Images for this article were created in Midjourney.

Table of contents

- What is LLM?

- How does Chat GPT work?

- Does AI think like a human?

- Why can’t LLMs replace humans?

- AI detectors

- What is a large language model (LLM)?

- Did AI Write the Bible?

- Why does an AI detector detect human text as AI?

- Is it important for SEO ranking to have no AI-generated text?

- Where should you focus instead?

- By the way, which text was written by AI?

- FAQ

What is LLM?

To understand whether AI-generated text influences SEO rankings, we first need to understand how the technology works. Imagine a huge collection of text gathered from many sources: that is what AI generators learn from. People then develop models that work with that data in a particular way. Many AI tools exist today, but text generation applications have their own specifics.

To be more precise, we will use the term LLM. A large language model (LLM) is an AI algorithm that uses deep learning techniques and massively large data sets to understand, summarize, generate, and predict new content. In simple terms, an LLM is the engine behind AI applications that create text.

Some of the most common models, many of them free to try, are:

- Chat GPT: Think of it as a talking robot. It can understand and talk like a human. It’s used in chatbots and even helps with writing.

- LLaMA: A family of openly available language models from Meta, Facebook’s parent company, that developers can run and adapt themselves.

- Anthropic Claude: It’s like a genius language machine, assisting researchers to solve tricky language problems.

- Google PaLM: Google’s wizard for understanding and translating languages.

- Falcon: Like a super-fast writer who can help create text and summaries.

- Claude.ai: The web app for chatting with Anthropic’s Claude, bringing its understanding and writing skills to everyday users.

Hugging Face allows you to access, fine-tune, and integrate pre-trained natural language processing models, making developing AI applications for tasks like chatbots, text generation, and language understanding easier. It also keeps you updated with the latest NLP research and fosters collaboration within the AI community.

OpenAI was among the first companies to build large neural networks on the Transformer architecture and to bring them to a mass audience.

Popular LLMs in 2023

| Model | Developer | Key Features |
| --- | --- | --- |
| GPT-4 | OpenAI | Multimodal, complex reasoning, academic skills |
| BLOOM | BigScience | Efficiency and open-source |
| GPT-J | EleutherAI | Open-source and large model |
| Copy AI | Copy AI | Blog, sales, and ad copy generation |
| Contenda | Contenda | Legal document generation |
| Cohere | Cohere | Chatbot content generation |
| Jasper AI | Jasper AI | Email content generation |
| ChatGPT | OpenAI | Chatbot for customer support and more |

LLMs are trained on up to trillions of words, including data from Wikipedia, large collections of books published on the Internet, and many other sources.

The next crucial step is comprehending how these models utilize the extensive data.

How does Chat GPT work?

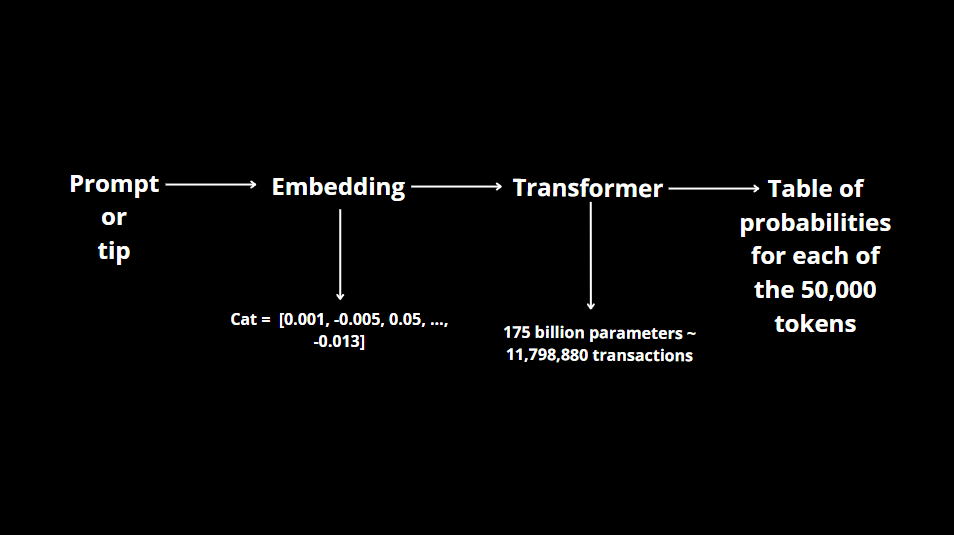

Let’s delve deeper into understanding with an example using the widely used application – Chat GPT. To do so, we’ll employ visualization.

Tokens

It’s essential to note that the model doesn’t grasp words in the traditional sense; instead, it works with tokens. From a technical viewpoint, the model predicts the next token in a sequence. In this context, a token is a unit of text: often a whole word or a piece of a word, roughly comparable to a syllable. This distinction is fundamental for understanding how the model processes and generates text.
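To make the idea of tokens concrete, here is a minimal sketch of greedy longest-match tokenization. The vocabulary `TOY_VOCAB` and the function `tokenize` are invented for this illustration. Real GPT tokenizers use a vocabulary learned from data with tens of thousands of entries, so their actual splits will differ.

```python
# Hypothetical toy vocabulary; real models learn theirs from data.
TOY_VOCAB = {"token", "iz", "ation", "un", "believ", "able", "cat", "s", " "}


def tokenize(text: str, vocab: set[str] = TOY_VOCAB) -> list[str]:
    """Split text into the longest vocabulary pieces, left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # Unknown character: emit it as its own single-character token.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("tokenization"))       # ['token', 'iz', 'ation']
print(tokenize("unbelievable cats"))  # ['un', 'believ', 'able', ' ', 'cat', 's']
```

Notice that one word can become several tokens, which is why token counts and word counts rarely match.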

You can visit the website to explore how tokenization works for OpenAI's GPT-3 models.

Once ChatGPT receives the initial input, it predicts the most plausible continuation based on everything that came before. It takes the entire list of prior tokens and predicts the next one, then adds that token to the list and repeats. The response is built one token at a time, which keeps each prediction step simple.
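The token-by-token loop can be sketched with a deliberately tiny stand-in model. Everything here is an assumption made for illustration: `CORPUS`, `train_bigrams`, and `generate` are hypothetical names, and the "model" only looks at the single previous token, whereas ChatGPT conditions on the whole preceding sequence through a neural network.

```python
from collections import Counter, defaultdict

# Tiny hypothetical "training corpus"; real models see vastly more text.
CORPUS = "the cat sat on the mat . the cat sat on the sofa .".split()


def train_bigrams(tokens):
    """Count how often each token follows each other token."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(follows, start, max_tokens=5):
    """Repeatedly append the most frequent next token (greedy decoding)."""
    output = [start]
    for _ in range(max_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # nothing ever followed this token in the corpus
        next_token = candidates.most_common(1)[0][0]
        output.append(next_token)
    return output


model = train_bigrams(CORPUS)
print(" ".join(generate(model, "the", max_tokens=4)))  # the cat sat on the
```

Each new token is chosen from what has been generated so far and then fed back in, which is the same loop a chat model runs at a much larger scale.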

To enhance predictions and improve accuracy, it converts the tokens into embeddings and passes the entire list through transformer layers to establish associations between words. For example, when you ask a question like “Who’s Thomas Edison?” ChatGPT gives the most weight to the most informative words in the list: “Who” and “Edison.”

Transformer layers

Transformer layers have their own role in the process. They are a form of neural network architecture trained to work out which tokens in a sequence are most relevant to one another. The training process isn’t as simple as it sounds, however: it takes a long time to train transformer layers on gigabytes of data.

In Chat GPT, the model understands the relationships and interconnections between tokens. It uses this knowledge to predict and generate text that maintains conversation context and coherence. This ability to recognize how tokens relate to one another enables the model to produce meaningful and contextually relevant responses in a conversation.
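How a transformer layer relates tokens to one another can be sketched with self-attention, the core operation of the Transformer architecture. This is a simplified illustration, not ChatGPT’s code: the two-number token vectors are made up, and the learned projection matrices that a real layer applies before comparing tokens are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Mix each token vector with the others, weighted by similarity.

    Simplification: queries, keys and values are the raw vectors;
    a real transformer first applies learned projection matrices.
    """
    dim = len(vectors[0])
    mixed = []
    for query in vectors:
        # Similarity of this token to every token (scaled dot product).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Weighted average of all token vectors.
        mixed.append([sum(w * v[d] for w, v in zip(weights, vectors))
                      for d in range(dim)])
    return mixed


# Hypothetical 2-number vectors for the tokens "Who", "is", "Edison".
tokens = [[1.0, 0.0], [0.1, 0.1], [0.9, 0.2]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

After this step, each token’s vector carries some information from the tokens it is most similar to, which is how context spreads through the sequence.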

Contextual Analysis:

- AI models like Chat GPT perform contextual analysis by considering the surrounding tokens in a text sequence. They evaluate the probabilities of different tokens based on the context of the preceding tokens. This contextual understanding allows them to generate coherent and contextually relevant responses.

Probabilities:

- In an AI model, a probability represents the likelihood of a specific token or word appearing in a given context. The model calculates these probabilities to predict the next token in a sequence. The token with the highest probability is the most likely choice, although models usually sample among the top candidates so that answers vary.

Set of Meanings:

- AI models also associate tokens with a set of possible meanings, which can be related to the token’s semantics, syntax, or context. Understanding these meanings is crucial for generating text that accurately conveys information and context.
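The probability step can be sketched as follows. The scores are invented for the example, and `next_token_probabilities` is a hypothetical helper; the underlying technique (a softmax over scores, optionally scaled by a "temperature") is the standard way language models turn scores into probabilities.

```python
import math
import random


def next_token_probabilities(scores, temperature=1.0):
    """Convert raw model scores into probabilities (softmax)."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores for the token after "The cat sat on the".
scores = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}

probs = next_token_probabilities(scores)
greedy = max(probs, key=probs.get)  # always picks the top token
rng = random.Random(0)              # seeded so the run is repeatable
sampled = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

print({tok: round(p, 3) for tok, p in probs.items()})
print("greedy:", greedy, "| sampled:", sampled)
```

A lower temperature concentrates probability on the top token, while a higher one spreads it out. This is one reason the same prompt can produce different answers.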

How LLMs work

Let’s delve into the inner workings of LLM applications to address a fundamental question: Can AI generate text that rivals human writing?

In the following exploration, we will dissect the mechanisms that power LLMs, offering insights into how they comprehend language, the nuances of meaning space, and their ability to craft text that emulates human expression.

Embedding

Embedding (Vector Representation): Imagine every word or piece of a word (like “cat”) is turned into a special number sequence, like a secret code. This sequence comprises about 1,500 numbers that capture the essence or meaning of that word.

For example, take the word “cat.” It becomes a unique code, like [0.001, -0.005, 0.05, …, -0.013]. These numbers represent what “cat” means in the language of the model.

Why Meaning Space Matters: Think of all these word codes as stars in the sky. The closer two stars are, the more similar their meanings. For our model, “cat” is close to words like “mouse,” “owner,” “dog,” and “pets” because they all relate to animals and pets. But it’s far from words like “financial accounting” because that’s a very different topic. So, the meaning space helps the model understand how words are related, making its text more sensible and relevant.
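The "distance between stars" is commonly measured with cosine similarity. The sketch below uses invented three-number vectors purely for illustration; real embeddings have far more dimensions and come from a trained model.

```python
import math


def cosine_similarity(a, b):
    """Close to 1.0 for vectors pointing the same way, 0.0 for perpendicular ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-number embeddings; real ones have far more dimensions.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "accounting": [0.1, 0.2, 0.9],
}

print(round(cosine_similarity(embeddings["cat"], embeddings["dog"]), 2))         # 0.99
print(round(cosine_similarity(embeddings["cat"], embeddings["accounting"]), 2))  # 0.3
```

With these made-up numbers, “cat” and “dog” score close to 1, while “cat” and “accounting” score much lower, matching the intuition of near and distant stars.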

You can learn more about this in the OpenAI blog.

Understanding Word Order: The distance between stars (words) helps the AI understand “word order.” It means the AI can decide which words to put in a sentence, and where, so that the sentence makes sense.

Transformer Interaction: The AI uses a “Transformer” system that works like a chain of interactions. It helps the AI understand how words relate to each other and how to arrange them in a sentence.

Picking the Most Likely Word: After all these interactions, the AI looks at the stars (words) and picks the one most likely to come next in a sentence. This way, it creates text that makes sense and fits the context.

How to “teach” GPT?

What does ChatGPT look like to a person? What do we mean by a trained model?

A “trained model” in the context of GPT refers to an AI model that has been exposed to extensive data and has learned patterns, context, and language use from that data. It’s like teaching the AI to understand and generate text by showing it a vast amount of text from the internet.

When you ask for the first 5 lines of Schopenhauer’s “The World as Will and Representation,” it can provide those lines because it has absorbed a wide range of knowledge up to its training cutoff date. It can generate text that resembles a coherent excerpt from the book, even though it has no direct access to the book itself.

But if I ask it for a specific comment on Reddit, it can’t tell me for sure.

Why?

Contextual Understanding: Generating a meaningful comment on a specific Reddit topic or post requires current context, which the AI might not have. It can provide general information and responses, but its responses might not be up-to-date or accurate for current events or specific discussions.

GPT-3 learns from massive text data, breaks text into smaller units (tokens), and predicts what comes next based on known patterns. It can generate responses that fit the context, like a text prediction tool.
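The "predict what comes next" objective can be sketched in a few lines. This illustrates the general next-token training idea only, not GPT-3’s actual training code; `training_pairs`, `cross_entropy`, and the example probabilities are hypothetical.

```python
import math


def training_pairs(tokens):
    """Each prefix of the text becomes an input; the next token is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(predicted_probs, target):
    """Loss is low when the model gave the true next token a high probability."""
    return -math.log(predicted_probs[target])


tokens = ["the", "cat", "sat"]
for context, target in training_pairs(tokens):
    print(context, "->", target)

# Hypothetical model output for the context ["the", "cat"].
guess = {"sat": 0.7, "ran": 0.2, "the": 0.1}
print(cross_entropy(guess, "sat"))  # smaller loss: the true token was likely
print(cross_entropy(guess, "the"))  # larger loss: the true token was unlikely
```

Training nudges the model’s parameters so that this loss shrinks across the whole corpus, which is what "learning patterns" amounts to in practice.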

Theory of Mind

Moreover, it goes beyond mere repetition. GPT-3 can draw conclusions and determine what’s essential in a given context. This ability to pick up the nuances of language and user intent is often described in terms of “Theory of Mind,” making it more than just a repetition tool: a context-aware, human-like language model.

Theory of Mind: In a common classification of AI types, this is the third level, describing machines that understand the needs of other intelligent entities. Such machines aim to understand and remember other entities’ emotions and needs and adjust their behavior accordingly, much as humans do in social interaction.

Does AI think like a human?

So, does AI think with the same analogies and logical chains as humans? In many ways, yes.

The intertwining of semantic meanings is a normal and essential aspect of how language and communication work for humans and artificial intelligence. It reflects the interconnected nature of language and thought.

Semantic meanings refer to the meanings of words, phrases, or sentences in the context of a specific language. These meanings are not isolated but are intricately connected, creating a web of associations and relationships.

The primary argument for why Large Language Models (LLMs) like GPT-3 can generate text that closely resembles human-generated text is their ability to understand and replicate the intertwined semantic meanings fundamental to human language and communication.

Here’s a breakdown of this argument:

- Semantic Understanding: LLMs are designed based on neural networks that excel at understanding the semantic meaning of text. They analyze how words and phrases relate to each other within the context in which they appear. This semantic understanding is crucial for generating text that makes sense, just as humans do when considering the meaning of their words.

- Pattern Recognition: LLMs learn from massive datasets containing vast amounts of human-generated text. In this learning process, they recognize patterns in how words and phrases convey meaning. These patterns reflect how humans structure and communicate their thoughts, making the generated text resemble human language.

- Contextual Awareness: LLMs can retain and utilize context in their text generation. They remember what has been said earlier in a conversation or text and use this context to produce coherent and contextually relevant responses. This mirrors how humans maintain conversations, relying on context to understand and respond appropriately.

- Variation and Creativity: LLMs can generate creative and varied text while adhering to learned patterns. This variability is essential for producing human-like text because humans express themselves differently and do not repeat the same phrases verbatim.

If you want to dive deeper

For a more in-depth understanding of how Large Language Models like GPT-3 work and why they can generate human-like text, I recommend reading Stephen Wolfram’s comprehensive article: What Is ChatGPT Doing, and Why Does It Work?

Stephen Wolfram provides valuable insights into the underlying mechanisms and processes that enable these models to understand and generate text closely resembling human language. It’s an excellent resource for anyone exploring the topic in greater detail.

Why can’t LLMs replace humans?

Large Language Models (LLMs) like GPT-3 have significantly advanced natural language processing and text generation. However, there are several reasons why LLMs can’t entirely replace humans:

Lack of Creativity and Innovation: LLMs primarily generate text based on patterns and information from their training data. While they can produce human-like text, they lack creativity and the ability to generate entirely new ideas or concepts. They rely on existing data and patterns, making them less suited for tasks that require genuine innovation.

No Fact-Checking Capability: LLMs cannot verify the accuracy or reliability of the information they generate. By default, they lack access to the internet or to databases of real-time, factual information. As a result, they may generate factually incorrect or outdated content. Human fact-checking and validation are essential in scenarios where accurate and up-to-date information is crucial.

Real-world example

While working on an article about outsourcing in Latin America, I stumbled upon a citation trend that seemed like a goldmine for boosting the article’s SEO. It appeared to be widely referenced and popular. However, my investigation revealed a concerning truth: no concrete evidence supported this data. There was a lack of academic backing, research sources, or any credible proof. This trend resembled a ghost that AI might have conjured up.

As it turns out, a high-ranked website had propagated this data, and other writers keen to enhance their content’s visibility linked to it.

The result?

Misinformation was spreading like wildfire.

This experience underscored the vital role of human researchers in fact-checking and verifying information, especially in the era of AI-generated content.

Contextual Understanding and Inference: LLMs can understand and generate text based on context, but they do not possess true comprehension or the ability to make complex inferences. Humans excel at understanding the subtle nuances, emotions, and deeper meanings in language, which are challenging for machines to grasp fully.

Lack of Critical Thinking: LLMs lack critical thinking skills. They cannot critically evaluate arguments, assess the validity of claims, or provide reasoned judgments. Humans are adept at critical thinking and decision-making, which are vital in various domains, such as legal, medical, and ethical contexts.

No Real-World Experience: LLMs do not have real-world experiences or the ability to apply knowledge gained through life experiences. Humans can draw from their experiences and intuition, often essential in decision-making and problem-solving.

Emotion and Empathy: LLMs do not possess emotions or empathy. They cannot understand or respond to emotional nuances in communication effectively. In contrast, humans excel at understanding and responding to emotions, making them indispensable in fields like counseling, therapy, and emotional support.

Ethical and Moral Judgment: LLMs lack moral and ethical judgment. They do not possess a sense of right or wrong. Humans are responsible for making ethical decisions and must consider ethical principles, cultural norms, and values when addressing complex moral dilemmas.

Complex Interpersonal Communication: LLMs are limited in their ability to engage in complex interpersonal communication. They may struggle with tasks requiring negotiation, diplomacy, and sensitive or delicate conversations.

AI detectors

An LLM checker is a tool or system designed to identify whether a given text has been generated by an LLM, such as GPT-3 or similar models. These checkers are developed to distinguish between human-generated text and text generated by artificial intelligence models. They work by analyzing the text’s patterns, structures, and other characteristics to determine whether it exhibits signs of being AI-generated.

However, it’s important to note that LLM checkers may not always be 100% accurate and can sometimes produce false positives or negatives.
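To see how a pattern-based check can misfire, consider the deliberately naive sketch below. It is not how any of the tools named in this article work; the rule ("flag text whose sentence lengths barely vary") and the threshold `min_spread` are assumptions invented for this example.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count of each sentence, splitting on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def looks_ai_generated(text, min_spread=2.0):
    """Hypothetical rule: flag text whose sentence lengths barely vary."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return False  # not enough evidence either way
    return statistics.pstdev(lengths) < min_spread


# A person could easily write this evenly paced text, yet it gets flagged.
human_text = "I like my cat. She sleeps all day. We play at night."
print(looks_ai_generated(human_text))  # True: a false positive
```

Any rule based on surface patterns will flag some human writing that happens to share those patterns, which is the false-positive problem described above.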

What is a large language model (LLM)?

The central idea is that LLMs closely mimic human semantic connections and associations in their text generation. They learn from humans, adopting logical and semantic connections in their language production. Despite their imperfections, their primary goal is to replicate human-like writing.

| AI Detector Name | Website | Description (as advertised) |
| --- | --- | --- |
| Scribbr | Scribbr AI Detector | Confidently detects GPT2, GPT3, and GPT3.5. Check all possible sections of text for AI with our Scribbr AI Detector. |
| Writer | AI Content Detector by Writer | Check what percentage of your content is seen as human-generated with this free AI content detector tool. Paste in text or a URL to find out. |
| Copyleaks | Copyleaks AI Content Detector | The AI Content Detector is the only platform with a high confidence rate in detecting AI-generated text that has been potentially plagiarized and/or paraphrased. |
| GPTZero | GPTZero AI Detector | GPTZero is the gold standard in AI detection, trained to detect ChatGPT, GPT4, Bard, LLaMa, and other AI models. |
| ContentDetector.AI | ContentDetector.AI | Accurate and Free AI Detector and Chat GPT Detector. This AI checker and AI Content Detector can be used to check ChatGPT Plagiarism without limitations. |
| Duplichecker.com | AI Detector by Duplichecker.com | DupliChecker’s AI detector is 100% accurate. This tool is based on advanced algorithms that deeply analyze your entered text and identify whether some of it is automated. |
| Smodin | AI Content Detector by Smodin | Smodin’s AI content detector is an advanced tool that can distinguish between human-written content and text generated by ChatGPT, Bard, or other AI tools. |
| Crossplag | AI Detector by Crossplag | An AI content detector from Crossplag. |
| Undetectable AI | Undetectable AI | Undetectable AI is a tool designed to detect AI-written text and make AI-generated content more human. |
| ZeroGPT | ZeroGPT – Accurate Chat GPT, GPT4 & AI Text Detector Tool | An AI detector for text, including blog posts, essays, and more. |
| Sapling | AI Detector by Sapling | AI detector for whether text is AI-generated or not, giving predictions for each portion of the text. |
| Detecting-AI.com | Detecting-AI.com | An AI detector for various written content, including documents, articles, social media messages, and website content. |
| Originality AI | Originality AI | Information about Originality AI, a tool designed for assessing the originality of content. |

Video about LLM

AI detectors struggle to accurately attribute authorship to texts generated by LLMs. Moreover, their prospects for future business development appear limited. They mainly attract attention from entrepreneurs influenced by marketing campaigns but face significant challenges in distinguishing AI-generated content from human-authored text effectively.

To provide concrete evidence, I ran an experiment. I wrote a text myself and ran it through an AI detection tool. The result was surprising: the tool confidently labeled the text as the work of artificial intelligence.

You can watch the full video here.

Did AI Write the Bible?

Diving further into the experiment, I decided to analyze the text of the Bible. When it was examined in its original format, the LLM checker identified it as written by a human with 98% confidence. Quite an achievement!

But here’s where it gets intriguing. What if we stripped away all the numbers and treated what remained as plain text? The result was remarkable. The LLM checker indicated that, without the numerical elements, approximately 74% of the text seemed to be generated by AI.

This outcome shows how hard it is to distinguish human from AI-generated content, and you can try it yourself with any popular online Bible.

Why does an AI detector detect human text as AI?

The phenomenon of AI detecting human-produced text as AI-generated can occur for several reasons, including the limitations of the algorithms and models used for detection. Here are some key factors contributing to this issue:

Limited Training Data

AI models designed to detect AI-generated text are typically trained on large datasets that contain examples of AI-generated text. However, these datasets may not cover the full diversity of human-written text across all domains and styles. As a result, the model may sometimes struggle to distinguish between certain human-written content and AI-generated content.

Similar Language Patterns

Human-written and AI-generated texts often follow the same language patterns, grammar rules, and semantic meanings. When AI models are trained to identify anomalies or deviations from these patterns, they might flag some human-written text as AI-generated if it exhibits unusual or atypical characteristics.

Evolution of AI

AI models are continually evolving and becoming more sophisticated. Some AI-generated text, particularly advanced language models, can closely mimic human writing styles and idioms, making it challenging to differentiate from human-written content.

Context dependence

Detecting AI-generated text often relies on context. In some contexts, specific phrases or content may seem more likely to come from AI, while in others, the very same text could appear entirely human-written. Contextual cues can be subtle and complex, leading to occasional misclassifications.

Semantic Overlap

As we already mentioned, AI models understand the semantic meanings of words based on their training data. However, words and phrases can have multiple meanings and associations, and the model’s interpretation may not always align perfectly with human intuition. This can result in false positives when identifying AI-generated text.

Is it important for SEO ranking to have no AI-generated text?

Google has stated unequivocally that the origin of content, whether human or AI-generated, does not in itself affect search ranking. The key to success lies in the quality of the content. To maintain good standing with Google’s ranking system, content creators should ensure their material is beneficial, unique, and pertinent. This aligns with Google’s E-E-A-T framework (experience, expertise, authoritativeness, and trustworthiness), which prioritizes content that:

- Is helpful to users.

- Demonstrates expertise in the subject matter.

- Is published on authoritative platforms.

- Is considered trustworthy and reliable.

In essence, the rise of AI in content creation doesn’t alter these fundamental criteria for SEO success.

AI and SEO Manipulation: Google maintains strict policies against using automation, including AI, to create content with the sole purpose of manipulating search rankings. Such practices are considered spam and are against Google’s guidelines.

A Responsible Approach: Google emphasizes the responsible use of AI in content creation while maintaining high standards for information quality and the helpfulness of content in Search.

Of course, when utilizing AI, it’s imperative to do so thoughtfully and strategically. Relying solely on AI to generate content without any human input can lead to issues. The best approach is a balanced one: start with your own concepts and ideas, verify data for accuracy, create the structural framework, and incorporate insights from contemporary sources.

By complementing AI’s capabilities with human ingenuity, you can ensure the content maintains a high standard of quality and relevance. This way, the risk of AI-generated content being perceived as spam or damaging SEO rankings is significantly reduced, and your brand’s reputation remains intact.

Where should you focus instead?

Instead of focusing solely on AI-generated content, it’s crucial to allocate your attention to the following aspects that concern quality SEO text generation:

1. Duplicate Content: Avoid publishing duplicate content as it can harm your SEO. Make sure your content is unique and provides value to your audience.

2. Substance: AI may lack the ability to create practical new insights, especially in specific niches. Ensure that your content resonates with the intended audience and serves a purpose.

3. Fact-Checking: Always fact-check AI-generated content to ensure accuracy and credibility. Incorrect information can damage your reputation and rankings.

4. Style: Pay attention to the style of your content. Whether academic or friendly, tailor your writing to your target audience for maximum engagement.

5. Linking: Incorporate relevant and high-quality links in your content. This can improve SEO and provide additional resources for your readers.

6. Keyword Search: Optimize your content for relevant keywords. Keyword research and strategic placement can boost your content’s discoverability.

7. Formatting: Proper formatting, including headings, bullet points, and visuals, enhances the readability and user experience of your content.
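The duplicate-content check from point 1 can be approximated before publishing. Below is a minimal sketch using word "shingles" and Jaccard similarity, a common textbook technique for near-duplicate detection. It is not a description of how Google detects duplicates, and the sample sentences are invented.

```python
def shingles(text, size=3):
    """Set of overlapping word sequences ("shingles") of the given size."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


original = "AI detectors sometimes flag human text as machine written"
copied = "AI detectors sometimes flag human text as AI generated"
fresh = "Fact checking protects your reputation and your rankings"

print(round(jaccard_similarity(original, copied), 2))  # 0.56: heavy overlap
print(jaccard_similarity(original, fresh))             # 0.0: no overlap
```

A score near 1.0 between a draft and an existing page suggests the draft needs more original material before it goes live.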

By the way, which text was written by AI?

In conclusion, the debate over the impact of AI-generated content on SEO rankings remains ongoing. Still, Google has clarified that content’s origin, whether human or AI, is not a ranking factor. The focus should be on the content’s quality, relevance, and trustworthiness. The balance between AI and human input is essential to maintain high content standards.

AI detectors may sometimes misidentify AI-generated text as human and vice versa due to various factors, including semantic overlap and the evolution of AI technology.

Instead of fixating solely on AI-generated content, it’s vital to concentrate on issues like avoiding duplicate content, ensuring factual accuracy, tailoring the style to the audience, proper formatting, and strategic keyword optimization. These factors play a more significant role in SEO success.

Ultimately, whether AI or a human created the text, the focus should always be on delivering valuable content that meets the E-E-A-T criteria: experience, expertise, authoritativeness, and trustworthiness. This balanced approach ensures content quality and maintains a positive SEO ranking.

FAQ

Is AI multilingual?

Yes. AI does not “understand” a language the way a person does; it processes text based on patterns in its training data, whatever language you use to communicate with it.

Can AI understand human emotions?

Some AI systems can recognize and respond to human emotions based on voice or text analysis, but they don’t have emotions.

Can AI replace human jobs?

AI has the potential to automate certain tasks, but it’s unlikely to replace humans entirely. Instead, it can enhance productivity and allow humans to focus on more creative and complex tasks.
